Reference manual

Soupault (soup-oh) is an HTML manipulation tool.

It can work either as a static site generator that builds a complete site from a page template and a directory with page body files, or an HTML processor for an existing website.

Soupault is based on HTML element tree rewriting. Pretty much like client-side DOM manipulation, just without the browser or interactivity.

You can tell it to do things like “insert contents of footer.html file into

<div id="footer"> no matter where that div is in the page”,

or “use the first <h1> element in the page for the page title”.

- Full HTML awareness

-

Soupault is aware of the full page structure. It can find and manipulate any element using

CSS3 selectors like

p,div#content, ora.nav, no matter where it is in the page. There is no need “front matter”— all metadata can be extracted from HTML itself. - No themes

-

Any page can be a soupault theme, you just tell it where to insert the content.

By default it inserts page content into the

<body>element, but it can be anything identifiable with a CSS selector. You decide how much is the “theme” and how much is the content. - Built for text-heavy, nested websites

- Tables of contents, footnotes, and breadcrumbs are supported out of the box. Existing element id's are respected, so you can make table of contents and footenote links permanent and immune to link rot, even if you change the heading text or page structure.

- Preserves your content structure

- Soupault can mirror your directory structure exactly, down to file extensions. You can use it to enhance an existing website without breaking any links, or gradually migrate from an HTML processor to the website generator workflow.

- Any input format

- HTML is the primary format, but you can use anything that can be converted to HTML. Just specify preprocessors for files with certain extensions.

- Easy to install

- Soupault is a single executable file with no dependencies. Just unpack the archive and it's ready to use.

- Fast

- Soupault is written in OCaml and compiled to native code on all platforms. This website takes less than 0.5 second to build.

- Extensible

- You can add your own HTML rewriting logic with Lua scripts.

For a very quick start:

- Create a directory for your site.

-

Run

soupault --init. It will create a page template intemplates/main.html, defaultsoupault.confconfig file, and a directory for pages (site). -

Drop some HTML files into the

sitedirectory. -

Run

soupaultin that directory. -

Look in

build/for the result.

For details, read the documentation below.

→ Overview

Soupault is named after the French dadaist and surrealist writer Philippe Soupault because it's based on the Lambda Soup library, which is a reference to the Beautiful Soup library, which is a reference to the Mock Turtle chapter from Alice in Wonderland (the best book on programming for the layman according to Alan Perlis), which is a reference to an actual turtle soup imitation recipe, and also a reference to tag soup, a derogatory term for malformed HTML, which is a reference to... in any case, soupault is not the French for a stick horse, for better or worse, and this paragraph is the only nod to dadaism in this document.

Soupault is quite different from other website generators. Its design goals are:

- No special syntax inside pages, only plain old semantic HTML.

- No templates or themes. Any HTML page can be a soupault theme.

- No front matter.

- Usable as a postprocessor for existing website and friendly to sites with unique pages.

People have written lots of static website generators, but most of them are variations on a single theme: take a file with some metadata (front matter) followed by content in a limited markup format such as Markdown and feed it to a template processor.

This approach works well in many cases, but has its limitations. A template processor is only aware of its own tags, but not of the full page structure. The front matter metadata is the only part of the page source it can use, and it can only insert it into fixed locations in the template. The part below the front matter is effectively opaque, and formats like Markdown simply don't allow you to add machine-readable annotations anyway.

Well-formed HTML, however, is a machine-readable format, and the libraries that can handle it existed for a long time.

As shown by microformats, you can embed a lot of information in it. More importantly,

unlike front matter metadata, HTML metadata is multi-purpose: for example, you can use the id

attribute for CSS styling and as a page anchor, as well as a unique element identifier for data extraction

or HTML manipulation programs.

With soupault, it's possible to take advantage of the full HTML markup and even make every page on your website look different rather than built from the same template, and still have an automated workflow.

You can also use Markdown/reStructuredText/whatever if you specify preprocessors. Soupault will automatically run a preprocessor before parsing your page, though you'll miss some finer points like footnotes if you go that way.

→ How soupault works

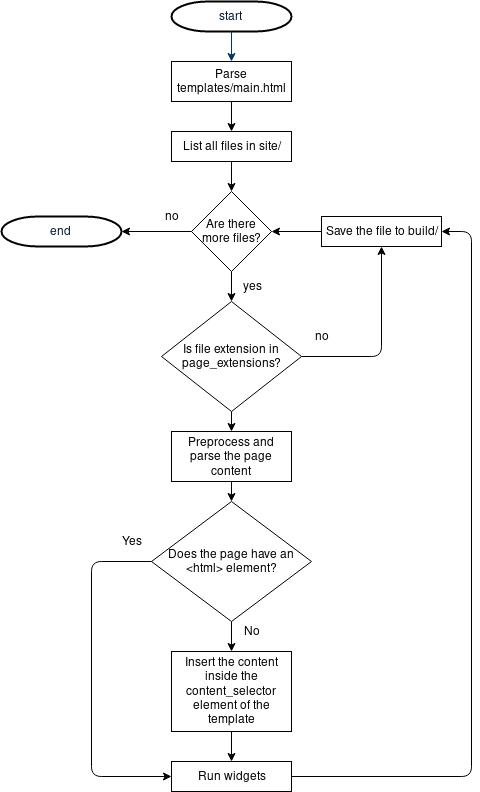

In the website generator mode (the default), soupault takes a page “template”—an HTML file devoid of content, parses it into an element tree, and locates the content container element inside it.

By default the content container is <body>, but you can use any selector:

div#content (a <div id="content"> element), article (an HTML5 article element),

#post (any element with id="post") or any other valid CSS selector.

Then it traverses your site directory where page source files are stored, takes a page file, and parses it into an HTML element tree too.

If the file is not a complete HTML document (doesn't have an <html> element in it),

soupault inserts it into the content container element of the template. If it is a complete page, then it goes straight to the next step.

The new HTML tree is then passed to widgets—HTML rewriting modules that manipulate it in different ways: incude other files or outputs of external programs into specific elements, create breadcrumbs for your page, they may delete unwanted elements too.

Processed pages are then written to disk, into a directory structure that mirrors your source directory structure.

Here is a simplified flowchart:

→ Performance

Despite its heavy-handed approach, soupault is reasonably fast. With a config that includes ToC, footnotes, file inclusion, breadcrumbs, and built-in section index generator, it can process 1000 copies of its own documentation page in about 14 seconds.1 Small websites take less than a second to build.

For comparison, in a simplified “read-parse-prettify-write” test with 1000 copies of this document, CPython/BeautifulSoup takes about 20 seconds to complete.

→ Why use soupault?

→ If you are starting a website

All website generators provide a default theme, but making new themes can be tricky. With soupault, there are no intermediate steps between writing your page layout and building your website from it.

In the simplest case you can just create a page skeleton in templates/main.html with an empty <body>,

add a bunch of pages to site/ (site/about.html, site/cv.html, ...)

and run the soupault command in that directory.

→ If you already have a website

If you have a website made of handwritten pages, you can use soupault as a drop-in automation tool.

Just copy your pages to the site directory, and you can already take advantages of features like

tables of content, without modifying any of your pages.

Then you can gradually migrate to using a shared template, if you want to. Take a page, remove the content from it, save that empty page

to templates/main.html, and start stripping pages in site down to their content.

Pages that don't have an <html> element will be treated as page bodies and inserted in the template,

but other pages will only be processed by widgets.

If you have an existing directory structure that you don't want to change because it will break links,

soupault can mirror it exactly, with the clean_urls = false config option.

It also preseves original file names and extensions in that mode.

→ What can it do?

Soupalt comes with a bunch of built-in widgets that can:

- Include external files, HTML snippets, or output of external programs into an element.

- Set the page title from text in some element.

- Generate breadcrumbs.

- Move footnotes out of the text.

- Insert a table of contents.

- Delete unwanted elements.

For example, here's a config for the title widget that sets the page title.

[widgets.page-title] widget = "title" selector = "#title" default = "My Website" append = " — My Website"

It takes the text from the element with id="title" and copies it to the <title>

tag of the generated page. It can be any element, and it can be a different element in every page.

If you use <h1 id="title"> in site/foo.html and <strong id="title">

in another, soupault will still find it.

It's just as simple to prevent something from appearing on a particular page. Just don't use an element that a widget uses for its target, and the widget will not run on that page.

Another example: to automatically include content of a templates/nav-menu.html into

the <nav> element, you can put this into your soupault.conf file:

[widgets.nav-menu] widget = "include" selector = "nav" file = "templates/nav-menu.html"

→ What it cannot do

By design:

- Development web server

-

There are plenty of those, even

python3 -m http.serveris perfectly good for previews. - Deployment automation.

- Same reason, there are lots of tools for it.

Because I don't need it and I'm not sure if anyone wants it or how it will fit:

- Asset management

- Incremental builds

- Multilingual sites

→ Installation

→ Binary release packages

Soupault is distributed as a single, self-contained executable, so installing it from a binary release package it trivial.

You can download it from files.baturin.org/software/soupault. Prebuilt executables are available for Linux (x86-64, statically linked), macOS (x86-64), and Microsoft Windows (32-bit, Windows 7 and newer).

Just unpack the archive and copy the executable wherever you want.

Prebuilt executables are compiled with debug symbols.

It makes them a couple of megabytes larger than they could be, but you can get better

error messages if something goes wrong. If you encounter an internal error, you can get an exception trace

by running it with OCAMLRUNPARAM=b environment variable.

→ Building from source

If you are familiar with the OCaml programming language, you may want to install from source.

Since version 1.6, soupault is available from the opam repository.

If you already have opam installed, you can install it with opam install soupault.

If you want the latest development version, the git repository is at github.com/dmbaturin/soupault.

To build a statically linked executable for Linux, identical to the official one, first install a +musl+static+flambda

compiler flavor, then uncomment the (flags (-ccopt -static)) line in src/dune.

→ Using soupault on Windows

Windows is a supported platform and soupault includes some fixups to account for the differences. This document makes a UNIX cultural assumption throughout, but most of the time the same configs will work on both systems. Some differences, however, require user intervention to resolve.

If a file path is only used by soupault itself, then the UNIX convention will work, i.e.

file = 'templates/header.html' and file = 'templates\header.html' are both valid options

for the include widget. However, if it's passed to something else in the system, then you must

use the Windows convention with back slashes. This applies to the preprocessors, the command option of the

exec widget, and the index_processor option.

So, if you are on Windows, remember to adjust the paths if needed, e.g.:

[widgets.some-script] widget = 'exec' command = 'scripts\myscript.bat' selector = 'body'

Note that inside double quotes, the back slash is an escape character, so you should either use single quotes for such paths

('scripts\myscript.bat') or use a double back slash ("scripts\\myscript.bat").

→ Getting started

→ Create your first website

Soupault has only one file of its own: the config file soupault.conf.

It does not impose any particular directory layout on you. However, it has default

settings that allow you to run it unconfigured.

You can initialize a simple project with default configuration using this command:

$ soupault --init

It will create the following directory structure:

. ├── site │ └── index.html ├── templates │ └── main.html └── soupault.conf

-

The

site/is a site directory where page files are stored. -

The

templates/directory is just a convention, soupault usestemplates/main.htmlas the default page template. -

soupault.confis the config file.

Now you can build your website. Just run soupault in your website directory,

and it will put the generated pages in build/. Your index page will become

build/index.html.

By default, soupault inserts page content into the <body> element

of the page template. Therefore, from the default template:

<html> <head></head> <body> <!-- your page content here --> </body> </html>

and the default index page source that is <p>Site powered by soupault</p>

it will make this page:

<!DOCTYPE html> <html> <head></head> <body> <p> Site powered by soupault </p> </body> </html>

You can use any CSS selector to determine where your content goes. For example,

you can tell soupault to insert it into <div id="content>

by changing the content_selector in soupault.conf

to content_selector = "div#content".

→ Page files

Soupault assumes that files with extensions .html, .htm, .md, .rst, and .adoc are pages

and processes them. All other files are simply copied to the build directory.

If you want to use other extensions, you can change it in soupault.conf.

For example, to add .txt to the page extension list, use this option:

[settings] page_file_extensions = ["htm", "html", "md", "rst", "txt"]

Remember that for files in formats other than HTML, you also need to specify a convertor, simply adding an extension to the list is not enough.

→ Clean URLs

Soupault uses clean URLs by default. If you add another page to site/,

for example, site/about.html, it will turn into build/about/index.html

so that it can be accessed as https://mysite.example.com/about.

Index files, by default files whose name is index are simply copied to the target

directory.

site/index.html → build/index.html site/about.html → build/about/index.html site/papers/theorems-for-free.html → build/papers/theorems-for-free/index.html

Note: a page named foo.html and a section directory named foo/ is

undefined behaviour when clean URLs are used. Don't do that to avoid unpredictable results.

This is what soupault will make from a source directory:

$ tree site/ site/ ├── about.html ├── cv.html └── index.html $ tree build/ build/ ├── about │ └── index.html ├── cv │ └── index.html └── index.html

→ Disabling clean URLs

If you've had a website for a long time and there are links to your page that will break if you change the URLs, you can make soupault mirror your site directory structure exactly and preserve original file names.

Just add clean_urls = false to the [settings] table of your

soupault.conf file.

[settings] clean_urls = false

→ Soupault as an HTML processor

If you want to use soupault with an existing website and don't want the template functionality,

you can switch it from a website generator mode to an HTML processor more where it doesn't use

a template and doesn't require the default_template to exist.

Recommended settings for the preprocessor mode:

[settings] generator_mode = false clean_urls = false

→ Page templates

Some people want all their pages to have a consistent look, and then having just a default_template

option works perfectly. Some people prefer to give every page a unique look, and then disabling generator mode

is a right thing to do. Yet a third category of people want just some pages or sections to look different.

For this use case, soupault supports multiple page templates. You can define them in the [templates]

section.

[templates.serious-template] file = "templates/serious.html" section = "serious-business" [templates.fun-template] file = "templates/fun.html" path_regex = "(.*)/funny-(.*)"

As of soupault 1.12, there is no way to specify a content_selector option per template,

and they all will use the content_selector option from [settings].

Page/section targeting options are discussed in detail in the widgets section.

Note that proper template targeting is your responsibility. Omitting the page, section, path_regex option will not cause errors,

but soupault may use a wrong template for some pages. If that happens, make your targeting options more specific.

→ Nested structures

A flat layout is not always desirable. If you want to create site sections, just add some

directories to site/. Subdirectories are subsections, their subdirectories

are subsubsections and so on—they can go as deep as you want. Soupault will process

them all recursively and recreate the directories in build/.

site/ ├── articles │ ├── goto-considered-harmful.html │ ├── index.html │ └── theorems-for-free.html ├── about.html ├── cv.html └── index.html build/ ├── about │ └── index.html ├── articles │ ├── goto-considered-harmful │ │ └── index.html │ ├── theorems-for-free │ │ └── index.html │ └── index.html ├── cv │ └── index.html └── index.html

Note that if your section does not have an index page, soupault will not create it automatically. If you want a page to exist, you need to make it.

→ Configuration

The default directory and file paths soupault --init creates are

not fixed, you can change any of them. If you prefer different names, or you

have an existing directory structure you want soupault to use, just edit

the soupault.conf file. This is [settings] section of the default config:

[settings] # Stop on page processing errors? strict = true # Display progress? verbose = false # Display detailed debug output? debug = false # Where input files (pages and assets) are stored. site_dir = "site" # Where the output goes build_dir = "build" # Files inside the site/ directory can be treated as pages or static assets, # depending on the extension. # # Files with extensions from this list are considered pages and processed. # All other files are copied to build/ unchanged. # # Note that for formats other than HTML, you need to specify an external program # for converting them to HTML (see below). page_file_extensions = ["htm", "html", "md", "rst", "adoc"] # Files with these extensions are ignored. ignore_extensions = ["draft"] # Soupault can work as a website generator or an HTML processor. # # In the "website generator" mode, it considers files in site/ page bodies # and inserts them into the empty page template stored in templates/main.html # # Setting this option to false switches it to the "HTML processor" mode # when it considers every file in site/ a complete page and only runs it through widgets/plugins. generator_mode = false # Files that contain an element are considered complete pages rather than page bodies, # even in the "website generator" mode. # This allows you to use a unique layout for some pages and still have them processed by widgets. complete_page_selector = "html" # Website generator mode requires a page template (an empty page to insert a page body into). # If you use "generator_mode = false", this file is not required. #default_template = "templates/main.html" # Page content is inserted into a certain element of the page template. This option is a CSS selector # used for locating that element. # By default the content is inserted into the content_selector = "body" # Soupault currently doesn't preserve the original doctype declaration # and uses the HTML5 doctype by default. You can change it using this option. doctype = "" # Enables or disables clean URLs. # When false: site/about.html -> build/about.html # When true: site/about.html -> build/about/index.html clean_urls = true # Since 1.10, soupault has plugin auto-discovery # A file like plugins/my-plugin.lua will be registered as a widget named my-plugin plugin_discovery = true plugin_dirs = ["plugins"]

Note that if you create soupault.conf file before running

soupault --init, it will use settings from that file instead

of default settings.

In this document, whenever a specific site or build dir has to be mentioned, we'll use default values.

If you misspell and option or make another mistake, soupault will warn you about an invalid option and try to suggest a correction.

Note that the config is typed and wrong value type has the same effect as missing

option. All boolean values must be true or false

(without quotes), all integer values must not have quotes around numbers,

and all strings must be in single or double quotes.

→ Custom directory layouts

If you are using soupault as an HTML processor, or using it as a part of a CI pipeline, the typical website generator approach with a single “project directory” may not be optimal.

You can override the location of the config using an environment variable SOUPAULT_CONFIG.

You can also override the locations of the source and destination directories with --site-dir

and --build-dir options. Thus it's possible to run soupault without a dedicated project

directory at all:

SOUPAULT_CONFIG="something.conf" soupault --site-dir some-input-dir --build-dir some-other-dir

→ Page preprocessors

Soupault has no built-in support for formats other than HTML, but you can use any format with it if you specify an appropriate page preprocessor.

Any preprocessor that takes page file as its argument and outputs the result to

stdout can be used.

For example, this configuration will make soupault call a program called

cmark on the page file if its extension is .md.

[preprocessors] md = "cmark"

The table key can be any extension (without the dot), and the value is a command. You can specify as many extensions as you want.

Preprocessor commands are executed in shell, so it's fine to use relative paths and add arguments. Page file name will be appended to the command string.

By default soupault assumes that files with extensions .md, .rst, .adoc

are pages. If you are using another extension, you need to add it to the

page_file_extensions list in the [settings] section,

else it will assume it's an asset and copy it unmodified, even if you configure a preprocessor

for that extension.

→ Automatic section and site index

Having to add links to all pages by hand can be a tedious task. Nothing beats a carefully written and annotated section index, but it's not always practical.

Soupault can automatically generate a section index for you. While it's not a blog generator and doesn't have built-in features for generateing indices of pages by date, category etc., it can save you time writing a section index pages by hand.

Metadata is extracted directly from pages using selectors you specify in the config. It's more than possible to use a different element for excerpt in every page, not just the first paragraph, without having to duplicate it in the “front matter”. It doesn't even have to be text either. Same goes for other fields.

To use automatic indexing, you still need an index page in your section.

It can be empty, but it must be there. Default index page name is index,

so you should make a page like site/articles/index.html first.

Then enable indexing in the config. All indexing options are in tne [index] table.

[index] index = true

By default, soupault will append the index to the <body> element.

You can tell it to insert it anywhere you want with the index_selector

option, e.g. index_selector = "div#index".

There are a few configurable options. You can specify element selectors for page title, excerpt, date, and author.

These are all available options:

[index] # Whether to generate indices or not # Default is false, set to true to enable index = false # Where to insert the index index_selector = "body" # Page title selector index_title_selector = "h1" # Page excerpt selector index_excerpt_selector = "p" # Page date selector index_date_selector = "time" # By default entries are sorted by date in ascending order (oldest to newest) # If you are making a blog, you can set this to true to sort from newest to oldest newest_entries_first = false # Date format for sorting # Default %F means YYYY-MM-DD # For other formats, see http://calendar.forge.ocamlcore.org/doc/Printer.html index_date_format = "%F" # Page author selector index_author_selector = "#author" # Mustache template for index entries index_item_template = "<div> <a href=\"{{url}}\">{{{title}}}</a> </div>" # External index generator # There is no default index_processor = # When false, causes soupault to ignore index_item_template and index_processor # options (and their default values). Useful if you want to use named views exclusively. use_default_view = true

→ Built-in index generator

Since version 1.6, soupault includes a built-in index generator that uses Mustache templates.

The following built-in fields are supported: url, title, excerpt, date, author.

For a simple blog feed, you can use something like this:

index_item_template = """

<h2><a href="{{url}}">{{title}}</a></h2>

<p><strong>Last update:</strong> {{date}}.</p>

<p>{{{excerpt}}}</p>

<a href="{{url}}">Read more</a>

"""

If you define custom fields, they also become available to the Mustache renderer.

An easy way to see what fields are available to the built-in and external generators is to run soupault --debug,

which prints a JSON dump of the index data among other things.

→ External index generators

The built-in index generator simply copies elements from the page to the index.

You can easily end up with a rather odd-looking index, especially if you are

using different elements on every page and identify them by id

rather than element name.

Generating indices and blog feeds is where template processors really shine. Everyone has different preferences though, so instead of having a built-in template processor, soupault supports exporting the index to JSON and feeding it to an external program.

JSON-encoded index is written to program's standard input, as a single line.2 It's a list of objects with following fields:

-

url -

Absolute page URL path, like

/papers/simple-imperative-polymorphism -

nav_path -

A list of strings that represents the logical section path, e.g. for

/pictures/cats/grumpyit will be["pictures", "cats"]. -

title, date, excerpt, author -

Metadata extracted from the page. Any of them can be

null. -

page_file - Original page file path.

Here's an example of very simple indexing setup that will take the first h1

of every page in a section and make an unordered list of links to them. The external

processor will use Python and Mustache

templates.

First, create an index.html page in every section and include a

<div id="index"> element in it.

Then write this to your config file:

[index] index = true index_selector = "#index" index_processor = "scripts/index.py" index_title_selector = "h1"

Then install the pystache library and save this script to scripts/index.py:

#!/usr/bin/env python3 import sys import json import pystache template = """ <li><a href="{{url}}">{{title}}</a></li> """ renderer = pystache.Renderer() input = sys.stdin.readline() index_entries = json.loads(input) print("<ul class=\"nav\">") for entry in index_entries: print(renderer.render(template, entry)) print("</ul>")

Index processors are not required to output anything. You can as well use them to save the index data somewhere and create taxonomies and custom indices from it with another script, then re-run soupault to have them included in the pages.3

→ Multiple index views

Since version 1.7, soupault supports multiple index “views” that allows you to present the same index data in different ways.

For example, you can have a blog feed in /blog, but a simple list or pages in every other section.

Views are defined in the [index.views] table. You can use either normal or inline table syntax, from TOML's point of view

it's the same. Example:

[index.views.title-and-date] index_selector = "div#index-title-date" index_item_template = "<li> <a href=\"{{url}}\">{{{title}}}</a> ({{date}})</li>" [index.views.custom] index_selector = "div#custom-index" index_processor = 'scripts/my-index-generator.pl'

Which view is used is determined by the index_selector option. In this example, if your section index page

has a <div id="index-title-date">, soupault will insert an index generated by the title-and-date view,

and if it has a <div id="index-custom">, it will insert the output of scripts/my-index-generator.pl.

If your page has both elements, both index views will be inserted in their containers. This may be useful if you want to include different views in the same page, e.g. show articles grouped by date and by author.

Note that defining custom views doesn't automatically make soupault ignore index_item_template and index_processor

options from the [index] table. Since the index_item_template option has a default value, and index_selector

defaults to "body", you may end up with an unwanted index at the top of your page if you leave the global index_selector

option undefined. If you want to use named views exclusively, add use_default_view = true to the [index] table.

→ Custom fields

Built-in fields should be enough for a typical blog taxonomy, but it's possible to add custom fields to your JSON index data.

Custom field queries are defined in the [index.custom_fields] table.

Table keys are field names as they will appear in the exported JSON.

Their values are subtables with required selector field

and optional select_all parameters.

You can also specify default value for a field that will be used if matching element

is not found, using default option. For fields with select_all

flag, default value is ignored.

[index.custom_fields] category = { selector = "span#category", default = "misc" } tags = { selector = ".tag", select_all = true }

In this example, the category field will contain the inner HTML

of the first <span id="category"> element even if there's

more than one in the page (if there are none, it will be set to "misc").

The tags field will contain a list of contents of all elements with class="tag".

→ Exporting site index to JSON

The index processor invoked with the index_processor option

receives the index of the current section. It doesn't include subsections.

Since the site directory is processed top to bottom, the site/index.html

page would not get the global site index either.

If you want to create your own taxonomies from the metadata imported from pages, create a global site index, or an index of a section and all its subsections, you can export the aggregated index data to a file for further processing. Add this option to your index config:

[index] dump_json = "path/to/file.json"

This way you can use a TeX-like workflow:

- Run soupault so that index file is created.

-

Run your custom index generator and save generated taxonomy pages to

site/. - Run soupault one more time to have them included in the build.

→ Behaviour

As of 1.6, generated index is always inserted before any widgets have run, so that the HTML produced by index generators can be processed by widgets.

Metadata extraction happens as early as possible. By default, it happens before any widgets have run,

to avoid adverse interaction with widgets. However, if you want data inserted by widgets to be available

in the index, you can make soupault do the extraction after certain widgets with a extract_after_widgets = ["foo", "bar"]

option in the [index] table.

Note that it doesn't mean no other widgets will run before metadata is extracted. It only means metadata will not be extracted

until widgets specified in the extract_after_widgets option have run. So, if you want a widget to run only

after metadata is extracted, you should specify all those widgets in its dependencies. There is no easier way to do that now.

→ Limiting index extraction to pages or sections

Since soupault 1.9, you can extract index data only from some pages/sections, or exclude specific pages/sections from indexing. The options are the same as for widgets.

→ Extracting the index without generating pages

Since 1.9, soupault supports --index-only option. In the index-only mode, it will extract the index data from pages

and save it in JSON, but will not generate any pages. It will only run widgets that must run before index extraction.

if you have extract_after_widgets option configured.

→ Widgets

Widgets provide additional functionality. When a page is processed, its content is inserted into the template, and the resulting HTML element tree is passed through a widget pipeline.

→ Widget behaviour

Widgets that require a selector option first check if there's an element matching

that selector in the page, and do nothing if it's not found, since they wouldn't

have a place to insert their output.

This way you can avoid having a widget run on a page simply by omitting the element it's looking for.

If more than one element matches the selector, the first element is used.

→ Widget configuration

Widget configuration is stored in the [widgets] table. The TOML syntax

for nested tables is [table.subtable], therefore, you will have entries

like [widgets.foo], [widgets.bar] and so on.

Widget subtable names are purely informational and have no effect, the widget type

is determined by the widget option. Therefore, if you want to use

a hypothetical frobnicator widget, your entry will look like:

[widgets.frobnicate] widget = "frobnicator" selector = "div#frob"

It may seem confusing and redundant, but if subtable name defined the

widget to be called, you could only have one widget of the same type,

and would have to choose whether to include the header or the footer

with the include widget for example.

→ Choosing where to insert the output

By default, widget output is inserted after the last child element in its container.

If you have a designated place in your page for the widget output,

e.g. leave an empty <div id="header"> for the header,

then this detail is unimportant.

However, if you are modifying existing pages or just want more control and flexibility,

you can specify the insert position using the action option.

For example, you can insert a header file before the first element in the page <body>:

[widgets.insert-header] widget = "include" file = "templates/header.html" selector = "body" action = "prepend_child"

Or insert a table of contents before the first <h1> element (it a page has it):

[widgets.table-of-contents] widget = "toc" selector = "h1" action = "insert_before"

Possible values of that option are: prepend_child, append_child, insert_before, insert_after, replace_element, replace_content.

→ Limiting widgets to pages or sections

If the widget target comes from the page content rather than the template,

you can simply not include any elements matching its selector

option.

Otherwise, you can explicitly set a widget to run or not run on specific pages or sections.

All options from this section can take either a single string, or a list of strings.

→ Limiting to pages or sections

There are page and section options that

allow you to specify exact paths to specific pages or sections.

Paths are relative to your site directory.

The page option limits a widget to an exact page file,

while the section option applies a widget to all

files in a subdirectory.

[widgets.site-news] # only on site/index.html and site/news.html page = ["index.html", "news.html"] widget = "include" file = "includes/site-news.html" selector = "div#news" [widgets.cat-picture] # only on site/cats/* section = "cats" widget = "insert_html" html = "<img src=\"/images/lolcat_cookie.gif\" />" selector = "#catpic"

→ Excluding sections or pages

It's also possible to explicitly exclude pages or sections.

[widgets.toc] # Don't add a TOC to the main page exclude_page = "index.html" ... [widgets.evil-analytics] exclude_section = "privacy" ...

→ Using regular expressions

When nothing else helps, path_regex and exclude_path_regex options

may solve your problem. They take a Perl-compatible regular expression (not a glob).

[widgets.toc] # Don't add a TOC to any section index page exclude_path_regex = '^(.*)/index\.html$' ... [widgets.cat-picture] path_regex = 'cats/'

→ Widget processing order

The order of widgets in your config file doesn't determine their processing order. By default, soupault assumes that widgets are independent and can be processed in arbitrary order. In future versions they may even be processed in parallel, who knows.

This can be an issue if one widget relies on putput from another. In that case,

you can order widgets explicitly with the after parameter.

It can be a single widget (after = "mywidget"after = ["some-widget", "another-widget"]).

Here is an example. Suppose in the template there's

a <div id="breadcrumbs"> where breadcrumbs are inserted by the

add-breadcrumbs widget. Since there may not be breadcrumbs if the

page is not deep enough, the div may be left empty, and that's not

neat, so the cleanup-breadcrumbs widget removes it.

## Breadcrumbs [widgets.add-breadcrumbs] widget = "breadcrumbs" selector = "#breadcrumbs" # <omitted> ## Remove div#breadcrumbs if the breadcrumbs widget left it empty [widgets.cleanup-breadcrumbs] widget = "delete_element" selector = "#breadcrumbs" only_if_empty = true # Important! after = "add-breadcrumbs" </omitted>

→ Limiting widgets to “build profiles”

Sometimes you may want to enable certain widgets only for some builds. For example, include analytics scripts only in production builds. It can be done with “build profiles”.

For example, this way you can only include includes/analytics.html file in your pages

for a build profile named “live”:

[widgets.analytics] profile = "live" widget = "include" file = "includes/analytics.html" selector = "body"

Soupault will only process that widget if you run soupault --profile live. If you run

soupault --profile dev, or run it without the --profile option, it will ignore that widget.

→ Built-in widgets

→ File and output inclusion

These widgets include something into your page: a file, a snippet, or output of an external program.

→ include

The include widget simply reads a file and inserts its content

into some element.

The following configuration will insert the content of templates/header.html

into an element with id="header" and the content of templates/footer.html

into an element with id="footer".

[widgets.header] widget = "include" file = "templates/header.html" selector = "#header" [widgets.footer] widget = "include" file = "templates/footer.html" selector = "#footer"

This widget provides a parse option that controls whether the file is parsed

or included as a text node. Use parse = false if you want to include a file verbatim,

with HTML special characters escaped.

→ insert_html

For a small HTML snippet, a separate file may be too much. The insert_html widget

[widgets.tracking-script] widget = "insert_html" html = '<script src="/scripts/evil-analytics.js"> </script>' selector = "head"

→ exec

The exec widget executes an external program and includes its output into

an element. The program is executed in shell, so you can write a complete command

with arguments in the command option. Like the include

widget, it has a parse option that includes the output verbatim

if set to false.

Simple example: page generation timestamp.

[widgets.generated-on] widget = "exec" selector = "#generated-on" command = "date -R"

→ preprocess_element

This widget processes element content with an external program and includes its output back in the page.

Element content is sent to program's stdin, so it can be used with any program

designed to work as a pipe. HTML entities are expanded, so if you have >

or & in your page, the program gets a > and &.

By default it assumes that the program output is HTML and runs it through an HTML parser.

If you want to include its output as text (with HTML special characters escaped), you should specify parse = false

For example, this is how you can run content of <pre> elements through cat -n

to automatically add line numbers:

[widgets.line-numbers] widget = "preprocess_element" selector = "pre" command = "cat -n" parse = false

You can pass element metadata to the program for better control. The tag name is passed in the TAG_NAME

environment variable, and all attributes are passed in environment variables prefixed with ATTR:

ATTR_ID, ATTR_CLASS, ATTR_SRC...

For example, highlight, a popular syntax highlighting tool,

has a language syntax option, e.g. --syntax=python. If your elements that contain

source code samples have language specified in a class (like <pre class="language-python">),

you can extract the language from the ATTR_CLASS variable like this:

# Runs the content of <* class="language-*"> elements through a syntax highlighter [widgets.highlight] widget = "preprocess_element" selector = '*[class^="language-"]' command = 'highlight -O html -f --syntax=$(echo $ATTR_CLASS | sed -e "s/language-//")'

Like all widgets, this widget supports the action option. The default is

action = "replace_content", but using different actions you can insert a rendered version

of the content alongside the original. For example, insert an inline SVG version of every Graphviz

graph next to the source, and then highlight the source:

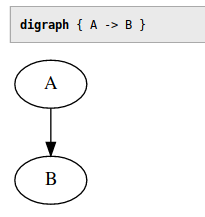

[widgets.graphviz-svg] widget = 'preprocess_element' selector = 'pre.language-graphviz' command = 'dot -Tsvg' action = 'insert_after' [widgets.highlight] after = "graphviz-svg" widget = "preprocess_element" selector = '*[class^="language-"]' command = 'highlight -O html -f --syntax=$(echo $ATTR_CLASS | sed -e "s/language-//")'

The result will look like this:

Note: this widget supports multiple selectors, e.g. selector = ["pre", "code"].

→ Environment variables

External programs executed by exec and preprocess_element widgets get

a few useful environment variables:

-

PAGE_FILE -

Path to the page source file, relative to the current working directory

(e.g.

site/index.html -

TARGET_DIR - The directory where the rendered page will be saved.

This is how you can include page's own source into a page, on a UNIX-like system:

[widgets.page-source] widget = "exec" selector = "#page-source" parse = false command = "cat $PAGE_FILE"

If you store your pages in git, you can get a page timestamp from the git log with a similar method (note that it's not a very fast operation for long commit histories):

[widgets.last-modified] widget = "exec" selector = "#git-timestamp" command = "git log -n 1 --pretty=format:%ad --date=format:%Y-%m-%d -- $PAGE_FILE"

The PAGE_FILE variable can be used in many different ways,

for example, you can use it to fetch the page author and modification date

from a revision control system like git or mercurial.

The TARGET_DIR variable is useful for scripts that modify or create page assets.

For example, this snippet will create PNG images from Graphviz graphs

inside <pre class="graphviz-png"> elements and replace those pre's with relative

links to images.

[widgets.graphviz-png] widget = 'preprocess_element' selector = '.graphviz-png' command = 'dot -Tpng > $TARGET_DIR/graph_$ATTR_ID.png && echo \<img src="graph_$ATTR_ID.png"\>' action = 'replace_element'

→ Content manipulation

→ title

The title widget sets the page title, that is, the <title>

from an element with a certain selector. If there is no <title> element

in the page, this widget assumes you don't want it and does nothing.

Example:

[widgets.page-title] widget = "title" selector = "h1" default = "My Website" append = " on My Website" prepend = "Page named "

If selector is not specified, it uses the first <h1>

as the title source element by default.

The selector option can be a list. For example, selector = ["h1", "h2", "#title"]

means “use the first <h1> if the page has it, else use <h2>,

else use anything with id="title", else use default”.

Optional prepend and append parameters allow you to insert some text

before and after the title.

If there is no element matching the selector in the page, it will use the

title specified in default option. In that case the prepend

and append options are ignored.

If the title source element is absent and default title is not set, the title is left empty.

→ footnotes

The footnotes widget finds all elements matching a selector,

moves them to a designated footnotes container, and replaces them with numbered links.4

As usual, the container element can be anywhere in the page—you can have footnotes at the top if you feel like it.

[widgets.footnotes] widget = "footnotes" # Required: Where to move the footnotes selector = "#footnotes" # Required: What elements to consider footnotes footnote_selector = ".footnote" # Optional: Element to wrap footnotes in, default is <p> footnote_template = "<p> </p>" # Optional: Element to wrap the footnote number in, default is <sup> ref_template = "<sup> </sup>" # Optional: Class for footnote links, default is none footnote_link_class = "footnote" # Optional: do not create links back to original locations back_links = true # Prepends some text to the footnote id link_id_prepend = "" # Appends some text to the back link id back_link_id_append = ""

The footnote_selector option can be a list, in that case all elements

matching any of those selectors will be considered footnotes.

By default, the number in front of a footnote is a hyperlink back to the original

location. You can disable it and make footnotes one way links with back_links = false.

You can create a custom “namespace” for footnotes and reference links using the link_id_prepend and back_link_id_append options.

This makes it easier to use custom styling for those elements.

link_id_prepend = "footnote-" back_link_id_append = "-ref"

→ toc

The toc widget generates a table of contents for your page.

Table of contents is generated from the heading tags from <h1>

to <h6>.

Here is the ToC configuration from this website:

[widgets.table-of-contents] widget = "toc" # Required: where to insert the ToC selector = "#generated-toc" # Optional: minimum and maximum levels, defaults are 1 and 6 respectively min_level = 2 max_level = 6 # Optional: use <ol> instead of <ul> for ToC lists # Default is false numbered_list = false # Optional: Class for the ToC list element, default is none toc_list_class = "toc" # Optional: append the heading level to the ToC list class # In this example list for level 2 would be "toc-2" toc_class_levels = false # Optional: Insert "link to this section" links next to headings heading_links = true # Optional: text for the section links # Default is "#" heading_link_text = "→ " # Optional: class for the section links # Default is none heading_link_class = "here" # Optional: insert the section link after the header text rather than before # Default is false heading_links_append = false # Optional: use header text slugs for anchors # Default is false use_heading_slug = true # Optional: use unchanged header text for anchors # Default is false use_heading_text = false # Place nested lists inside a <li> rather than next to it valid_html = false

→ Choosing the heading anchor options

For the table of contents to work, every heading needs a unique id

attribute that can be used as an anchor.

If a heading has an id attribute, it will be used for the anchor.

If it doesn't, soupault has to generate one.

By default, if a heading has no id, soupault will generate

a unique numeric identifier for it.5

This is safe, but not very good for readers (links are non-indicative) and for people who want to share

direct links to sections (they will change if you add more sections).

If you want to find a balance between readability, permanence, and ease of maintenance, there are a few ways you can do it and the choice is yours.

The use_heading_slug = true option converts the heading text

to a valid HTML identifier. Right now, however, it's very aggressive

and replaces everything other than ASCII letters and digits with hyphens.

This is obviously a no go for non-ASCII languages, that is, pretty much

all languages in the world. It may be implemented more sensibly in the future.

The use_heading_text = true option uses unmodified heading text

for the id, with whitespace and all. This is against the rules of HTML,

but seems to work well in practice.

Note that use_heading_slug and use_heading_text

do not enforce uniqueness.

All in all, for best link permanence you should give every heading a unique id

by hand, and for best readability you may want to go with use_heading_text = true.

→ breadcrumbs

The breadcrumbs widget generates breadcrumbs for the page.

The only required parameter is selector, the rest is optional.

[widgets.breadcrumbs] widget = "breadcrumbs" selector = "#breadcrumbs" prepend = ".. / " append = " /" between = " / " breadcrumb_template = "<a class="\"nav\""></a>" min_depth = 1

The breadcrumb_template is an HTML snippet that can be used

for styling your breadcrumbs.

It must have an <a> element in it.

By default, a simple unstyled link is used.

The min_depth sets the minimum nesting depth where breadcrumbs

appear. That's the length of the logical navigation path rather than directory path.

There is a fixup that decrements the path for section index pages, that is, pages namedindex

by default, or whatever is specified in the index_page option.

Their navigation path is considered one level shorter than any other page in the section,

when clean URLs are used. This is to prevent section index pages from having links

to themselves.

-

site/index.html→ 0 -

site/foo/index.html→ 0 (sic!) -

site/foo/bar.html→ 1

→ HTML manipulation

→ delete_element

The opposite of insert_html. Deletes an element that matches a selector. It can be useful in two situations:

- Another widget may leave an element empty and you want to clean it up.

- Your pages are generated with another tool and it inserts something you don't want.

# Who reads footers anyway? [widgets.delete_footer] widget = "delete_element" selector = "#footer"

You can limit it to deleting only empty elements with only_if_empty = true option.

Element is considered empty if there's nothing but whitespace inside it.

It's possible to delete only the first element matching a selector by adding delete_all = false

to its config.

→ Plugins

Since version 1.2, soupault can be extended with Lua plugins. Currently there are following limitations:

-

The supported language is Lua 2.5, not modern Lua 5.x. That means no closures and no

forloops in particular. Here's a copy of the Lua 2.5 reference manual. -

Only string and integer options can be passed to plugins via widget options from

soupault.conf

Plugins are treated like widgets and are configured the same way.

You can find ready to use plugins in the Plugins section on this site.

→ Installing plugins

→ Plugin discovery

By default, soupault looks for plugins in the plugins/ directory.

Suppose you want to use the Site URL plugin.

To use that plugin, save it to plugins/site-url.lua. Then a widget named site-url

will automatically become available.

The site_url option from the widget config

will be accessible to the plugin as config["site_url"].

[widgets.absolute-urls] widget = "site-url" site_url = "https://www.example.com"

You can specify multiple plugin directories using the plugin_dirs option under [settings]:

[settings] plugin_dirs = ["plugins", "/usr/share/soupault/plugins"]

If a file with the same name is found in multiple directories, soupault will use the file from the first directory in the list.

You can also disable plugin discovery and load all plugins explicitly.

[settings] plugin_discovery = false

→ Explicit plugin loading

You can also load plugins explicitly. This can be useful if you want to:

- load a plugin from an unusual directory

- use a widget name not based on the plugin file name

- override a built-in widget with a plugin

Suppose you want to associate the site-url.lua plugin with absolute-links widget name. Add this snippet to soupault.conf:

[plugins.absolute-links] file = "plugins/site-url.lua"

It will register the plugin as a widget named absolute-links.

Then you can use it like any other widget. Plugin subtable name becomes the name of the widget,

in our case absolute-links.

[widgets.make-urls-absolute] widget = "absolute-links" site_url = "https://www.example.com"

If you want to write your own plugins, read on.

→ Plugin example

Here's the source of that Site URL plugin that converts relative links to absolute URLs by prepending a site URL to them:

-- Converts relative links to absolute URLs -- e.g. "/about" -> "https://www.example.com/about" -- Get the URL from the widget config site_url = config["site_url"] if not Regex.match(site_url, "(.*)/$") then site_url = site_url .. "/" end links = HTML.select(page, "a") -- Lua array indices start from 1 local index = 1 while links[index] do link = links[index] href = HTML.get_attribute(link, "href") if href then -- Check if URL schema is present if not Regex.match(href, "^([a-zA-Z0-9]+):") then -- Remove leading slashes href = Regex.replace(href, "^/*", "") href = site_url .. href HTML.set_attribute(link, "href", href) end end index = index + 1 end

In short:

-

Widget options can be retrieved from the

confighash table. -

The element tree of the page is in the

pagevariable. You can think of it as an equivalent ofdocumentin JavaScript. -

HTML.select()function is likedocument.querySelectorAllin JS. -

The

HTMLmodule provides an API somewhat similar to DOM API in browsers, though it's procedural rather than object-oriented.

→ Plugin environment

Plugins have access to the following global variables:

-

page -

The page element tree that can be manipulated with functions from the

HTMLmodule. -

page_file -

Ppage file path, e.g.

site/index.html -

target_dir -

The directory where the page file will be saved, e.g.

build/about/. -

nav_path -

List of strings representing the logical nativation path. For example, for

site/foo/bar/quux.htmlit's["foo", "bar"]. -

page_url -

Relative page URL, e.g.

/articlesor/articles/index.html, depending on theclean_urlssetting. -

config - A table with widget config options.

Note: only string and integer options can be passed to plugins through the config table.

You cannot pass TOML lists or inline tables to plugins in the current soupault version.

You sort of can pass booleans, but they will be converted to strings "true" and "false",

so you need to compare explicitly.

→ Plugin API

Apart from the standard Lua 2.5 functions, soupault provides additional modules.

→ The HTML module

→ HTML.parse(string)

Example: h = HTML.parse("<p>hello world<p>")

Parses a string into an HTML element tree

→ HTML.create_element(tag, text)

Example: h = HTML.create_element("p", "hello world")

Creates an HTML element node.

→ HTML.create_text(string)

Example: h = HTML.create_text("hello world")

Creates a text node that can be inserted into the page just like element nodes. This function automatically escapes all HTML special characters inside the string.

→ HTML.inner_html(html)

Example: h = HTML.inner_html(HTML.select(page, "body"))

Returns element content as a string.

→ HTML.strip_tags(html)

Example: h = HTML.strip_tags(HTML.select(page, "body"))

Returns element content as a string, with all HTML tags removed.

→ HTML.select(html, selector)

Example: links = HTML.select(page, "a")

Returns a list of elements matching specified selector.

→ HTML.select_one(html, selector)

content_div = HTML.select(page, "div#content")

Returns the first element matching specified selector, or nil if none are found.

→ HTML.select_any_of(html, selectors)

Example: link_or_pic = HTML.select_any_of(page, {"a", "img"})

Returns the first element matching any of specified selectors.

→ HTML.select_all_of(html, selectors)

Example: links_and_pics = HTML.select_all_of(page, {"a", "img"})

Returns all elements matching any of specified selectors.

Returns element's parent.

Example: if there's an element that has a <blink> in it, insert a warning just before that element.

blink_elem = HTML.select_one(page, "blink") if elem then parent = HTML.parent(blink_elem) warning = HTML.create_element("p", "Warning: blink element ahead!") HTML.insert_before(parent, warning) end

→ Access to surrounding elements

This family of functions provides access to element's neighboring elements in the tree. They all return a (possibly empty) list.

-

HTML.children -

HTML.ancestors -

HTML.descendants -

HTML.siblings

Example: add class="silly-class" to every element inside the page <body>.

body = HTML.select_one(page, "body") children = HTML.children(body) local i = 1 while children[i] do if HTML.is_element(children[i]) then HTML.add_class(children[i], "silly-class") end i = i + 1 end

→ HTML.is_element

Web browsers provide a narrower API than general purpose HTML parsers. In the JavaScript DOM API, element.children

provides access to all child elements of an element.

However, in the HTML parse tree, the picture is more complex. Text nodes are also child nodes—browsers just filter those out because JavaScript code rarely has a need to do anything with text nodes.

Consider this HTML: <p>This is a <em>great</em> paragraph</p>. How many children does

the <p> element have? In fact, three: text("This is a "), element("em", "great"), text(" paragraph").

The goal of soupault is to allow modifying HTML pages in any imagineable ways, so it cannot ignore this complexity. Many operations like

HTML.add_class still make no sense for text nodes, so there has to be a way to check if something is an element or not.

That's where HTML.is_element comes in handy.

→ HTML.get_attribute(html_element, attribute)

Example: href = HTML.get_attribute(link, "href")

Returns the value of an element attribute. The first argument must be an element reference

produced by HTML.select/HTML.select_one/HTML.select_element

If the attribute is missing, it returns nil. If the attribute is present but

its value is empty (like in <elem attr=""> or <elem attr>),

it returns an empty string. In Lua, both empty string and nil are false for the purpose of if value then ... end,

so if you want to check for presence of an attribute regardless of its value, you should

explicitly check for nil.

→ HTML.set_attribute(html_element, attribute)

Example: HTML.set_attribute(content_div, "id", "content")

Sets an attribute value. The first argument must be an element reference

produced by HTML.select/HTML.select_one/HTML.select_element

→ HTML.add_class(html_element, class_name)

Example: HTML.add_class(p, "centered")

Adds a class="class_name" attribute. The first argument must be an element reference

produced by HTML.select/HTML.select_one/HTML.select_element

→ HTML.remove_class(html_element, class_name)

Example: HTML.remove_class(p, "centered")

Adds a class="class_name" attribute. The first argument must be an element reference

produced by HTML.select/HTML.select_one/HTML.select_element

→ HTML.get_tag_name(html_element)

Returns the tag name of an element.

Example: link_or_pic = HTML.select_any_of(page, {"a", "img"}); tag_name = HTML.get_tag_name(link_of_pic)

→ HTML.set_tag_name(html_element)

Changes the tag name of an element.

Example: ”modernize” <blink> elements by converting them to <span class="blink">.

blinks = HTML.select(page, "blink") local i = 1 while blinks[i] do elem = blinks[i] HTML.set_tag_name(elem, "span") HTML.add_class(elem, "blink") i = i + 1 end

→ HTML.append_child(parent, child)

Example: HTML.append_child(page, HTML.create_element("br"))

Appends a child element to the parent.

Apart from HTML.append_child, there's a family of functions that insert an element at a different position in the tree:

HTML.prepend_child, HTML.insert_before, HTML.insert_after, HTML.replace_element,

and HTML.replace_content.

→ HTML.delete(html_element)

Example: HTML.delete(HTML.select_one(page, "h1"))

Deletes an element from the page. The argument must be an element reference returned by a select function.

→ HTML.delete_content(html_element)

Deletes all children of an element (but leaves the element itself in place).

→ HTML.clone_content(html_element)

Creates a new element tree from the content of an element.

Useful for duplicating an element elsewhere in the page.

→ HTML.get_heading_level(html_element)

For elements whose tag name matches <h[1-9]> pattern, returns the heaving level.

Returns zero for elements whose tag name doesn't look like a heading and for values that aren't HTML elements.

→ HTML.get_headings_tree(html_element)

Returns a table that represents the tree of HTML document headings in a format like this:.

[

{

"heading": ...,

"children": [

{"heading": ..., "children": []}

]

},

{"heading": ..., "children": []}

]

Values of the heading fields are HTML element references.

→ Behaviour

If an element tree access function cannot find any elements (e.g. there are no elements that match a selector),

it returns nil.

If a function that expects an HTML element receives nil, it immediately returns nil,

so you don't need to check for nil at every step and can safely chain calls to those functions.

→ The Regex module

Regular expressions used by this module are mostly Perl-compatible. Capturing groups and back references are not supported.

→ Regex.match(string, regex)

Example: Regex.match("/foo/bar", "^/")

Checks if a string matches a regex.

→ Regex.find_all(string, regex)

Example: matches = Regex.find_all("/foo/bar", "([a-z]+)")

Returns a list of substrings matching a regex.

→ Regex.replace(string, regex, string)

Example: s = Regex.replace("/foo/bar", "^/", "")

Replaces the first occurence of a matching substring. It returns a new string and doesn't modify the argument.

→ Regex.replace_all(string, regex, string)

Example: Regex.replace_all("/foo/bar", "/", "")

Replaces every matching substring. It returns a new string and doesn't modify the argument.

→ Regex.split(string, regex)

Example: substrings = Regex.find_all("foo/bar", "/")

Splits a strings at a separator.

→ The Plugin module

Provides functions for communicating with the plugin runner code.

→ Plugin.fail

Example: Plugin.fail("Error occured")

Stops plugin execution immediately and signals an error. Errors raised this way are treated as widget processing errors by soupault, for the purpose of the strict option.

→ Plugin.exit

Example: Plugin.exit("Nothing to do"), Plugin.exit()

Stops plugin execution immediately. The message is optional. This kind of termination is not considered an error by soupault.

→ Plugin.require_version

Example: Plugin.require_version("1.8.0")

Stops plugin execution if soupault version is less than required version. You can give a full version like 1.9.0 or a short version like 1.9. This function was introduced in 1.8, so plugins that use it will fail to work in 1.7 and below.

→ The Sys module

→ Sys.read_file

Example: Sys.read_file("site/index.html")

Reads a file into a string. The path is relative to the working directory.

→ Sys.run_program(command)

Executes given command in the system shell (/bin/sh on UNIX, cmd.exe on Windows).

The output of the command is ignored. If command fails, its stderr is logged.

Example: creating a silly file in the directory where generated page will be stored.

res = Sys.run_program(format("echo \"Kilroy was here\" > %s/graffiti", target_dir)) if not res then Log.warning("Damn, busted") end

The intended use case for it is creating and processing assets, e.g. converting images to different formats.

→ Sys.get_program_output(command)

Executes a command in the system shell and returns its output.

If the command fails, it returns nil. The stderr is shown in the execution log,

but there's no way a plugin can access its stderr or the exit code.

Example: getting the last mofidication date of a page from git.

git_command = "git log -n 1 --pretty=format:%ad --date=format:%Y-%m-%d -- " .. page_file timestamp = Sys.get_program_output(git_command) if not timestamp then timestamp = "1970-01-01" end

→ Sys.random(max)

Example: Sys.ranrom(1000)

Generates a random number from 0 to max.

→ Sys.is_unix ()

Returns true on UNIX-like systems (Linux, Mac OS, BSDs), false otherwise.

→ Sys.is_windows ()

Returns true on Microsoft Windows, false otherwise.

→ The String module

→ String.trim

Example: String.trim(" my string ") -- produces "my string"

Removed leading and trailing whitespace from a string.

→ String.slugify_ascii

Example: String.slugify_ascii("My Heading") -- produces "my-heading"

Replaces all characters other than English letters and digits with hyphens, exactly like the ToC widget.

→ String.truncate(string, length)

Truncates a string to given length.

Example: String.truncate("foobar", 3) -- "foo".

→ String.to_number

Example: String.to_number("2.7") -- produces 2.7 (float)

Converts strings to numbers. Returns nil is a string isn't a valid representation of a number.